Services

Models and applications

We specialize in building solutions for clients who need to extract key information from their processes. With large quantities of data available in databases, we can draw conclusions, predict events, condense information, impute missing data, and find trends, helping clients make better-informed decisions and save staff time.

We use a range of objectives (regression, classification, clustering, etc.) and models (neural networks, Gaussian processes, autoencoders, etc.) to answer questions such as:

- When is my system likely to fail, so that I can anticipate and prevent the failure?

- How can I minimize risk when granting loans and credit to clients?

- Where is a mineral deposit most likely to be found?

- Where should efforts be focused to mitigate forest fires and deforestation?

- How can agricultural production be optimized with respect to climate?

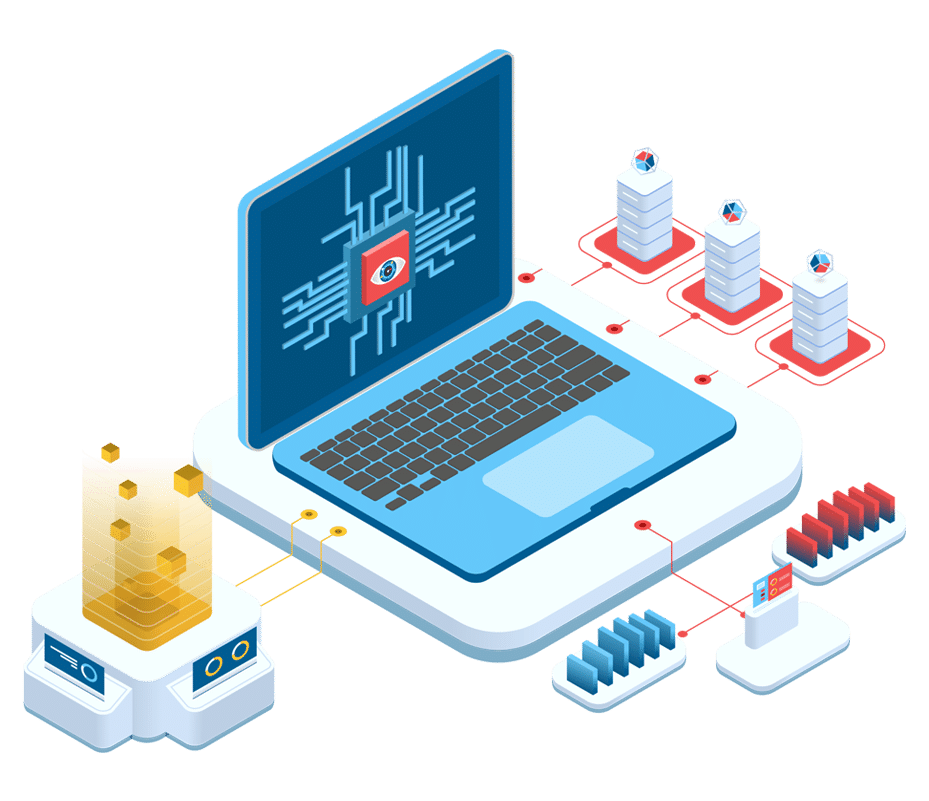
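
As an illustration of the classification objective applied to the loan-risk question, here is a minimal sketch using scikit-learn. It assumes synthetic applicant data generated with `make_classification` (no real loan records), a logistic regression model, and a hypothetical decision threshold of 0.5.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def train_risk_model(random_state: int = 0):
    """Fit a default-risk classifier on synthetic applicant data (illustrative only)."""
    # Synthetic stand-in for historical loan records: 8 numeric features,
    # label 1 = defaulted, 0 = repaid, roughly 20% positives.
    X, y = make_classification(
        n_samples=1000,
        n_features=8,
        n_informative=5,
        weights=[0.8, 0.2],
        random_state=random_state,
    )
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=random_state
    )
    # Scale features, then fit a logistic regression classifier.
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    model.fit(X_train, y_train)
    return model, X_test, y_test


model, X_test, y_test = train_risk_model()

# Estimated probability of default for each held-out applicant.
p_default = model.predict_proba(X_test)[:, 1]

# Hypothetical risk threshold: applicants at or above it are flagged for review.
THRESHOLD = 0.5
flagged = p_default >= THRESHOLD
print(f"accuracy: {model.score(X_test, y_test):.2f}, flagged: {int(flagged.sum())} of {len(flagged)}")
```

A logistic model is used here because its probabilities are easy to inspect; a real credit model would need real features, calibration, and validation.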

Big Data

We define big data by the three Vs: volume, velocity, and variety. In recent years, several new techniques have each addressed part of the challenge of managing such high-volume data flows. Data is recorded by sensors or other data sources, then extracted, transformed, and loaded (ETL) into a data lake or data warehouse (e.g. SQL databases, Snowflake, Databricks). It is then processed on compute clusters, where separate nodes work jointly and concurrently to feed a model, using frameworks and platforms such as Hadoop, Spark, Kubernetes, Azure, and many others.
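
The ETL flow described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: a small hypothetical table of sensor readings stands in for the data source, pandas performs the transform step, and an in-memory SQLite database stands in for the data warehouse. At scale, the same three steps would typically be distributed across a cluster, e.g. with Spark.

```python
import sqlite3

import pandas as pd


def extract() -> pd.DataFrame:
    """Extract: hypothetical raw sensor readings (stand-in for files, APIs or queues)."""
    return pd.DataFrame({
        "sensor_id": ["a", "a", "b", "b", "b"],
        "timestamp": ["2024-01-01 00:00", "2024-01-01 00:01", "2024-01-01 00:00",
                      "2024-01-01 00:01", "2024-01-01 00:02"],
        "temp_f": [68.0, None, 71.6, 72.5, 73.4],
    })


def transform(raw: pd.DataFrame) -> pd.DataFrame:
    """Transform: drop incomplete rows, normalize timestamps, convert units."""
    df = raw.dropna(subset=["temp_f"]).copy()
    df["timestamp"] = pd.to_datetime(df["timestamp"]).dt.strftime("%Y-%m-%dT%H:%M:%S")
    df["temp_c"] = (df["temp_f"] - 32) * 5 / 9  # Fahrenheit to Celsius
    return df.drop(columns="temp_f")


def load(df: pd.DataFrame, conn: sqlite3.Connection, table: str) -> None:
    """Load: write the cleaned rows into the warehouse table."""
    df.to_sql(table, conn, if_exists="replace", index=False)


conn = sqlite3.connect(":memory:")  # in-memory SQLite as a warehouse stand-in
load(transform(extract()), conn, "readings")

# Downstream consumers (e.g. model training) query the warehouse.
summary = pd.read_sql_query(
    "SELECT sensor_id, COUNT(*) AS n, AVG(temp_c) AS mean_c "
    "FROM readings GROUP BY sensor_id ORDER BY sensor_id",
    conn,
)
print(summary)
```

Keeping extract, transform, and load as separate functions makes each step easy to test and to swap out, for example replacing SQLite with a real warehouse connection.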

Computer Vision

Deep learning neural networks have made great progress in gaining a higher-level understanding of images and video. The domain includes advances in image classification, object detection, and object tracking, allowing new models to interpret medical images, satellite imagery, and road scenes for self-driving cars, or to perform facial recognition, read text in images, and so on. It is a fast-moving field; recent deep convolutional neural network (CNN) models include You Only Look Once (YOLO) and EfficientNet with a Feature Pyramid Network (FPN), typically implemented in TensorFlow or PyTorch.
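
To make the core CNN building block concrete, here is a minimal NumPy sketch of a single convolution followed by a ReLU activation. It is not YOLO or EfficientNet; the toy image and the hand-picked edge-detection kernel are assumptions for illustration. In a trained CNN the kernel weights are learned, and frameworks provide optimized layers such as `torch.nn.Conv2d` in PyTorch or `tf.keras.layers.Conv2D` in TensorFlow.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid-mode 2D cross-correlation, the operation CNN layers call 'convolution'."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Weighted sum of the image patch currently under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Standard CNN activation: keep positive responses, zero out the rest."""
    return np.maximum(x, 0)


# Toy 5x5 grayscale "image": dark left columns, bright right columns.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-picked vertical-edge detector (assumption; a CNN would learn its weights).
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

feature_map = relu(conv2d(image, kernel))
print(feature_map)
```

The feature map responds strongly (value 3) where the kernel straddles the dark-to-bright edge and is zero over the uniform bright region; stacking many such learned filters is what lets deep CNNs detect increasingly complex patterns.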

Prediction

Classification and regression are classic machine learning tasks, usually applied to interpolation (imputation) or extrapolation (prediction) of data. Widely used toolkits include scikit-learn, a comprehensive Python library with algorithms for classification, clustering, regression, and dimensionality reduction, along with general numerical computing libraries such as NumPy and SciPy. Data is loaded and handled with pandas, and analyses can be run in Jupyter Notebooks.
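
The difference between interpolation (imputation) and extrapolation (prediction) can be shown with a minimal sketch using pandas and scikit-learn. It assumes a hypothetical toy monthly series with one missing value and a plain linear regression; real data would usually call for a more flexible model.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression

# Hypothetical toy series: monthly sales with one missing observation.
df = pd.DataFrame({
    "month": [1, 2, 3, 4, 5, 6],
    "sales": [10.0, 12.0, np.nan, 16.0, 18.0, 20.0],
})

# Fit a regression on the rows where the target is known.
known = df.dropna(subset=["sales"])
model = LinearRegression().fit(known[["month"]], known["sales"])

# Interpolation (imputation): fill the gap inside the observed range.
missing = df["sales"].isna()
df.loc[missing, "sales"] = model.predict(df.loc[missing, ["month"]])

# Extrapolation (prediction): forecast beyond the observed range.
forecast = model.predict(pd.DataFrame({"month": [7]}))
print(df)
print(forecast)
```

Because the toy data lie exactly on the line sales = 2 * month + 8, the imputed value for month 3 is 14 and the forecast for month 7 is 22.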

We have done extensive work on a Gaussian process regression toolkit widely used by industry and academia.
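
The toolkit mentioned above is not named here, so the following sketch uses scikit-learn's `GaussianProcessRegressor` instead to show what Gaussian process regression provides: a predictive mean together with an uncertainty estimate. The noisy sine data and the RBF-plus-white-noise kernel are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF, WhiteKernel

# Synthetic training data (assumption): noisy samples of sin(x) on [0, 10].
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 10, 20)).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(0, 0.1, X.shape[0])

# Smooth RBF component plus a white-noise term for measurement noise;
# hyperparameters are tuned by maximizing the marginal likelihood during fit.
kernel = 1.0 * RBF(length_scale=1.0) + WhiteKernel(noise_level=0.01)
gp = GaussianProcessRegressor(kernel=kernel, normalize_y=True, random_state=0)
gp.fit(X, y)

# Predict inside and beyond the training range, with uncertainty.
X_new = np.linspace(0, 12, 50).reshape(-1, 1)
mean, std = gp.predict(X_new, return_std=True)
print(gp.kernel_)
```

The predictive standard deviation grows for inputs beyond the training range (x > 10), which is a practical advantage of Gaussian processes when deciding how far to trust a prediction.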